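Today I installed an LLM on a Raspberry Pi. It works and the answers are passable, but performance is very poor. I installed it with Docker using the `ollama/ollama` image; Docker Hub lists an arm64 build of that image, so it runs on the Raspberry Pi.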
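The arm64 image is the natural match for a 64-bit Raspberry Pi OS. As a rough pre-flight check, here is a minimal Python sketch. The helper name `looks_like_arm64_host` is hypothetical, and the check assumes that a 64-bit Python interpreter implies a 64-bit userland (a 64-bit kernel can report `aarch64` even under a 32-bit userland, so the machine name alone is not enough). Treat it as a heuristic, not a definitive test.

```python
import platform
import struct


def looks_like_arm64_host(machine: str, pointer_bits: int) -> bool:
    """Heuristic: 64-bit ARM kernel *and* a 64-bit interpreter (assumed to mean a 64-bit userland)."""
    return machine.lower() in ("aarch64", "arm64") and pointer_bits == 64


if __name__ == "__main__":
    machine = platform.machine()       # the same value `uname -m` reports
    bits = struct.calcsize("P") * 8    # pointer size of this Python build, in bits
    if looks_like_arm64_host(machine, bits):
        print(f"{machine}, {bits}-bit: the arm64 image should fit")
    else:
        print(f"{machine}, {bits}-bit: the arm64 image may not be picked by default")
```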

```
docker run -d -p 11434:11434 --name ollama ollama/ollama:0.1.29
```

Once the container is up, Ollama's status can be checked on port 11434:

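```
$ curl http://localhost:11434
Ollama is running
```

Ollama exposes a Web API for calling its functions. To save the time of studying it, I attached to the container and ran commands inside it directly, starting by pulling the `llama2` model: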

```
root@83cf69f4af80:/# ollama pull llama2
pulling manifest
pulling 8934d96d3f08... 100% ▕████████████████████████████████▏ 3.8 GB
pulling 8c17c2ebb0ea... 100% ▕████████████████████████████████▏ 7.0 KB
pulling 7c23fb36d801... 100% ▕████████████████████████████████▏ 4.8 KB
pulling 2e0493f67d0c... 100% ▕████████████████████████████████▏ 59 B
pulling fa304d675061... 100% ▕████████████████████████████████▏ 91 B
pulling 42ba7f8a01dd... 100% ▕████████████████████████████████▏ 557 B
verifying sha256 digest
writing manifest
removing any unused layers
success
```

Then I ran the `llama2` model and asked it a question:

```
root@83cf69f4af80:/# ollama run llama2
>>> What is a primary number?
A primary number, also known as a prime number, is a positive integer that is divisible only by itself and 1. In other words,
it is a number that is not divisible by any other number except for 1 and itself. Examples of primary numbers include 2, 3,
5, 7, 11, and 13.
```

Once the model started, memory usage jumped by 20%, and as soon as the conversation began the CPU stayed pinned at 100%. Initialization took more than a minute, and each token took about 5 seconds on average. At that speed it is basically unusable.
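Rather than timing tokens by eye, the Web API mentioned earlier reports timing statistics with each non-streamed reply. Below is a minimal sketch, assuming the `/api/generate` endpoint on the port published by the `docker run` command above and its `eval_count`, `eval_duration` and `load_duration` response fields (durations in nanoseconds). `build_request` and `tokens_per_second` are hypothetical helper names. At the roughly 5 seconds per token observed here, the reported speed should come out around 1 / 5 = 0.2 tokens/s.

```python
import json
import urllib.request

# Assumption: Ollama listens on the default port published by `docker run -p 11434:11434`.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        OLLAMA_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def tokens_per_second(result: dict) -> float:
    """Generation speed: eval_count tokens produced over eval_duration nanoseconds."""
    return result["eval_count"] / (result["eval_duration"] / 1e9)


if __name__ == "__main__":
    req = build_request("llama2", "What is a prime number?")
    # No timeout: on a Raspberry Pi a full answer can take many minutes.
    with urllib.request.urlopen(req) as resp:
        result = json.load(resp)
    print(result["response"])
    print(f"model load: {result['load_duration'] / 1e9:.1f} s")
    print(f"speed: {tokens_per_second(result):.2f} tokens/s")
```

Streaming is turned off so the whole answer and its statistics arrive as one JSON object; with streaming on, the same statistics appear only in the final chunk.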

Next I asked a programming question. It produced code along with an explanation, which met expectations:

````
>>> Write a code for me in Python to judge if a number is prime number.
Of course! Here is a simple function in Python that takes an integer as input and returns `True` if it is a prime number, and
`False` otherwise:

```
def is_prime(n):
    if n < 2:
        return False
    for i in range(2, int(n ** 0.5) + 1):
        if n % i == 0:
            return False
    return True
```

Here's how the function works:

1. If the input number is less than 2, it is not a prime number (since 2 is the smallest prime number). So we return `False`.
2. We loop through the numbers from 2 to `n ** 0.5`, where `n ** 0.5` is the square root of `n`. We check if `n` is divisible
by any of these numbers. If it is, then it is not a prime number.
3. If `n` is not divisible by any of these numbers, then it is prime. So we return `True`.

Here's an example usage of the function:

```
print(is_prime(25)) # Should print True
print(is_prime(30)) # Should print False
```

I hope this helps! Let me know if you have any questions or need further clarification.
````

The function itself is correct, although the model's own usage comment is wrong: 25 = 5 × 5 is not prime, so `is_prime(25)` returns `False`.

Finally, I asked a question in Chinese ("If all people will die, and Socrates is a person, will Socrates die?"). Surprisingly, the answer came back in English, and it showed the usual LLM habit of confidently talking nonsense:

>>> 如果所有人都会死,苏格拉底是人,那么苏格拉底会不会死?

This is a classic paradoxical question, and the answer is yes, according to the paradox.

The paradox states that if every person will die, then Socrates (or anyone else) will not die. This is because if everyone

will die, then Socrates will also die, which goes against the initial assumption that he won't die.

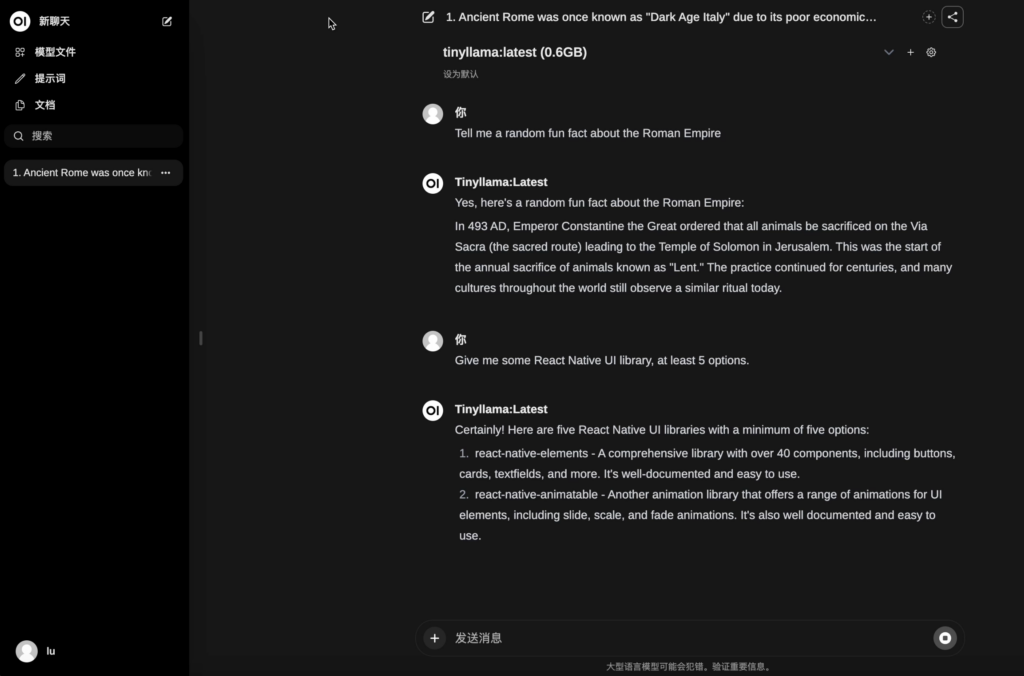

So, according to the paradox, Socrates will not die, even though everyone else will.考虑到 `llama2` 模型的体积较大,也许不适合树莓派的环境,所以更换了一个体积更小的 `tinyllama` 模型,测试速度快了很多,但是仍然不具备实用性。用 `open-webui` 镜像安装了一个 Web 界面,效果就是这个样子:
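The size difference that motivated switching from `llama2` (about 3.8 GB in the pull log above) to `tinyllama` can also be checked through the Web API. Below is a minimal sketch, assuming the `/api/tags` endpoint on the default port and the `name` and `size` (bytes) fields of each entry in its `models` list; `model_sizes` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumption: default Ollama address, as published by the docker run command.
TAGS_URL = "http://localhost:11434/api/tags"


def model_sizes(tags: dict) -> list[tuple[str, float]]:
    """Return (model name, size in GB) pairs from an /api/tags response, smallest first."""
    pairs = [(m["name"], m["size"] / 1e9) for m in tags.get("models", [])]
    return sorted(pairs, key=lambda p: p[1])


if __name__ == "__main__":
    with urllib.request.urlopen(TAGS_URL) as resp:
        tags = json.load(resp)
    for name, gb in model_sizes(tags):
        print(f"{name:30} {gb:6.2f} GB")
```

Sorting smallest first puts the lightest candidates for a memory-constrained board at the top of the list.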

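All in all, the features work well; it is simply far too slow. Later I may look into training a private model and running it with Ollama for personal office work and coding. Reaching a practical level will require a more powerful machine, possibly with a GPU.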